Posted by Sam on Mar 12, 2008 at 12:00 AM UTC - 6 hrs

TeamCity is a build server from JetBrains that I'm starting to like. It checks your code out, builds it, and runs your unit tests against the compiled code (among other things), continuously integrating your code each time someone checks in a change to the repository (or on demand, if you'd like).

Oh, and it's a bit faster than CruiseControl as well (at least for me).

It's free for many applications - those where you won't use more than 20 user accounts, 20 build configurations, 3 build agents, and don't need anything more than the standard web-based authentication interface. (A build configuration is a way of building using a build agent - e.g., you could build based on a .NET solution file, ANT file, or many other ways. A build agent appears to be the computer itself, though I'm not sure of that yet.)

In any case, there is an "enterprise" license that costs a couple of thousand dollars to get you around most of those limitations. The only exception is the limitation to three build agents - you can buy extra licenses for that at $299 a pop. I can't foresee the need for even two, but that might be because I have yet to see all the functions they serve.

Anyway, if you've been paying even partial attention, you'll know I'm a huge fan of the DRY principle, especially as it relates to source code. So you won't be surprised to learn that one of my favorite features is the duplicate-code report.

I haven't yet found a way to make the build fail for duplication, but seeing the reports is nice.

I was also a bit disappointed it didn't catch code like this:

public int add(int x, int y) { return x+y; }

public int anotherAdd(int z, int a) { return z+a; }

But, it's nice to know it will at least catch copy-and-paste reuse.
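Catching the pair above takes more than text comparison, because the two methods differ only in the names they use. As a rough illustration of what a name-insensitive check would have to do (this is my own Python sketch, not how TeamCity's duplicate finder works, and `normalize` is a made-up helper that only knows the three keywords in these snippets), you could rename every identifier to a positional placeholder before comparing:

```python
import re

# Only the Java keywords that appear in the two snippets; a real tool
# would need the full keyword list and a proper tokenizer.
JAVA_KEYWORDS = {"public", "int", "return"}


def normalize(source: str) -> str:
    """Rename each distinct identifier to id0, id1, ... in order of first use."""
    names = {}

    def rename(match):
        word = match.group(0)
        if word in JAVA_KEYWORDS:
            return word
        if word not in names:
            names[word] = f"id{len(names)}"
        return names[word]

    renamed = re.sub(r"[A-Za-z_]\w*", rename, source)
    # Collapse whitespace so formatting differences don't matter either.
    return " ".join(renamed.split())


first = "public int add(int x, int y) { return x+y; }"
second = "public int anotherAdd(int z, int a) { return z+a; }"

print(first == second)                        # False: the text differs
print(normalize(first) == normalize(second))  # True: the structure is the same
```

Both methods normalize to `public int id0(int id1, int id2) { return id1+id2; }`, which is why a structural comparison flags them while a copy-and-paste check does not.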

I was set up and running my duplication checks in a few minutes, but not everything is that painless: setup is mostly easy, while finding the reasons for a build failure and editing are less so. It's not hard, by any stretch of the imagination, but they set such a high standard on the "add" part that even the minor annoyances I encountered on the rest seemed large. A lot of that comes from being new not just to the technology, but to some of the terminology they use as well.

Anyway, I've not dived in deep enough to see how or if it might work for other languages or platforms, but if you're using Java or .NET, I'd recommend you give it a try.

Posted by Sam on Jun 30, 2011 at 04:40 PM UTC - 6 hrs

I was introduced to the concept of making estimates in story points instead of hours back in the Software Development Practices course when I was in grad school at UH (taught by professors Venkat and Jaspal Subhlok).

I became a fan of the practice, so when I started writing todoxy to manage my todo list, it was an easy decision to not assign units to the estimates part of the app. I didn't really think about it for a while, but recently a todoxy user asked me:

What units does the "Estimate" field accept?

My response was

The estimate field is purposefully unit-less. That's because the estimate field gets used in determining how much you can get done in a week, so you could think of it in hours, minutes, days, socks, difficulty, rainbows, or whatever -- just as long as in the same list you always think of it in the same terms.

A while back, Jeff Sutherland (one of the inventors of the Scrum development process) pointed out some of the reasons why story points are better than hours. It boiled down to four reasons:

- We are bad at estimating hours, but more consistent with points

- Hours tell us nothing since the best developer on the team may be multiple times faster than the worst

- It takes less time to estimate in points than hours

- "The management metric for project delivery needs to be a unit of production [because] production is the precondition to revenue ... [and] hours are expense and should be reduced or eliminated whenever possible"

But I noticed another benefit in my personal habits. Not only does it free us from the shackles of thinking in time and the poor estimates that come as a result, it also corrects itself when you make mistakes.

I recognized this when I saw myself giving higher estimates for work I didn't really want to do. Like a contractor multiplying by a pain-in-the-ass factor for her worst customer, I was consistently going to fib(x+1) in my estimates for a project I wasn't enjoying.

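But it doesn't matter. Because the estimates on that list are consistently inflated, my velocity on it is a correspondingly higher number than on my other list, and the two cancel out. If anything, I hurt myself: any item I didn't inflate looks cheap next to that velocity, so I commit to more work on that list each week.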
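Here is the arithmetic behind that, as a small Python sketch. The numbers are invented, `weeks_to_finish` is a hypothetical helper, and I'm assuming the inflation is a clean factor of two on every item (fib(x+1) is really closer to 1.6 times, and not perfectly consistent):

```python
def weeks_to_finish(backlog_estimates, completed_per_week):
    """Forecast = points left in the backlog / average points finished per week."""
    velocity = sum(completed_per_week) / len(completed_per_week)
    return sum(backlog_estimates) / velocity


# The same three tasks and the same two weeks of history, estimated honestly...
honest = weeks_to_finish([2, 3, 5], completed_per_week=[5, 5])

# ...and with every estimate doubled because I don't enjoy the project.
inflated = weeks_to_finish([4, 6, 10], completed_per_week=[10, 10])

print(honest, inflated)  # 2.0 2.0
```

The inflation shows up in both the backlog and the velocity, so the forecast in weeks is unchanged. It only bites when one item on the inflated list is estimated honestly, because that item then takes up less of the week's budget than it should.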

What do you think about estimating projects in leprechauns?

Posted by Sam on Sep 04, 2008 at 12:00 AM UTC - 6 hrs

Outsourcing is not going away. You can delude yourself with myths of poor quality and miscommunication all you want, but the fact remains that people are solving those problems and making outsourcing work.

As Chad Fowler points out in the intro to the section of MJWTI titled "If You Can't Beat 'Em", when a company decides to outsource, it's often a strategic decision after much deliberation. Few companies (at least responsible ones) would choose to outsource by the seat of their pants, and then change their minds later. (It may be the case that we'll see some reversal, and then more, and then less, ... , until an equilibrium is reached - this is still new territory for most people, I would think.)

Chad explains the situation where he was sent to India to help improve the offshore team there:

If [the American team members] were so good, and the Indian team was so "green," why the hell couldn't they make the Indian team better? Why was it that, even with me in India helping, the U.S.-based software architects weren't making a dent in the collective skill level of the software developers in Bangalore?

The answer was obvious. They didn't want to. As much as they professed to want our software development practices to be sound, our code to be great, and our people to be stars, they didn't lift a finger to make it so. These people's jobs weren't at risk. They were just resentful. They were holding out, waiting for the day they could say "I told you so," then come in and pick up after management's mess-making offshore excursions.

But that day didn't come. And it won't.

The world is becoming more "interconnected," and information and talent cross borders more easily than they have in the past.

And it's not something unique to information technologists - though it may be the easiest to pull off in that realm.

So while you lament that people are losing their jobs to cheap labor and then demand higher minimum wages, also keep in mind that you should be trying to do something about it. You're not going to reverse the outsourcing trend with any more success than record companies and movie studios are going to have stopping peer-to-peer file sharing.

That's right. In the fight over outsourcing, you, the high-paid programmer, are the big bad RIAA and those participating in the outsourcing are the Napsters. They may have succeeded in shutting down Napster, but in the fight against the idea of Napster, they've had as much strategic success as the War on Drugs (that is to say, very little, if any). Instead of fighting it, you need to find a way to accept it and profit from it - or at least work within the new parameters.

How can you work within the new parameters? One way is to "Manage 'Em." Chad describes several characteristics that you need to have to be successful with an offshore development team, which culminate in a "new kind" of PM:

What I've just described is a project manager. But it's a new kind of project manager with a new set of skills. It's a project manager who must act at a different level of intensity than the project managers of the past. This project manager needs to have strong organizational, functional, and technical skills to be successful. This project manager, unlike those on most onsite projects, is an absolutely essential role for the success of an offshore-developed project.

This project manager possesses a set of skills and innate abilities that are hard to come by and are in increasingly high demand.

It could be you.

Will it be?

Chad suggests learning to write "clear, complete functional and technical specifications," and knowing how to write use cases and use UML. These sorts of things aren't flavor-of-the-month with Agile Development, but in this context, Agile is going to be hard to implement "by the book."

Anyway, I'm interested in your thoughts on outsourcing, any insecurities you feel about it, and what you plan to do about them (if anything). (This is an open invitation for outsourcers and outsourcees too!) You're of course welcome to say whatever else is on your mind.

So, what do you think?

Posted by Sam on Sep 12, 2008 at 12:00 AM UTC - 6 hrs

If we accept the notion that we need to figure out how to work with outsourcing because it's more likely to increase than decrease or stagnate, then it would be beneficial for us to become "Distributed Software Development Experts" (Fowler, pg 169).

To do that, you need to overcome challenges associated with non-colocated teams that exceed those experienced by teams who work in the same geographic location. Chad lists a few of them in this week's advice from My Job Went To India (I'm not quoting):

- Communication bandwidth is lower when it's not face to face. Most will be done through email, so most of it will suck comparatively.

- Being in (often widely) different time zones means synchronous communication is limited to a few overlapping hours of work. If you get stuck and need an answer, you stay stuck until you're in one of those overlaps. That sucks.

- Language and cultural barriers contribute to dysfunctional communication. You might need an accent-to-accent translator to desuckify things.

- Because of poor communication, we could find ourselves in situations where we don't know what each other is doing. That leads to duplicative work in some cases, and undone work in others. Which leads to more sucking for your team.

The bad news is that there's a lot of potential to suck. The good news is there's already a model for successful and unsuccessful geographically distributed projects: those of open source.

You can learn in the trenches by participating. You can find others' viewpoints on successes and failures by asking them directly, or by reviewing open source project case studies.

Try to think about the differences and be creative with ways to address them. Doing that means you'll be better equipped to cope with challenges inherent in outsourced development. And it puts you miles ahead of your bitchenmoaning colleagues who end up trying to subvert the outsourcing model.

Posted by Sam on May 19, 2009 at 12:00 AM UTC - 6 hrs

This is the tenth and final post in a series of answers to 100 Interview Questions for Software Developers.

The list is not intended to be a "one-size-fits-all" list. Instead, "the key is to ask challenging questions that enable you to distinguish the smart software developers from the moronic mandrills." Even still, "for most of the questions in this list there are no right and wrong answers!"

Keeping that in mind, I thought it would be fun for me to provide my off-the-top-of-my-head answers, as if I had not prepared for the interview at all. Here's that attempt.

Though I hope otherwise, I may fall flat on my face. Be nice, and enjoy (and help out where you can!).

- How many of the three variables scope, time and cost can be fixed by the customer?

Two. (See The 'Broken Iron Triangle' for a good discussion.)

- Who should make estimates for the effort of a project? Who is allowed to set the deadline?

The team tasked with implementing the project should make the estimates. The deadline can be set by the customer if they forgo choosing the cost or scope. There are cases where the team should set the deadline. One is when the team is working on many projects concurrently: the team can give the deadline to management, knowing that priorities on other projects can be rearranged if the deadline for the new project needs to be more aggressive than the team has time for.

Otherwise, I imagine management is free to set it according to organizational priorities.

- Do you prefer minimization of the number of releases or minimization of the amount of work-in-progress?

I generally prefer to minimize the amount of work on the table, as it can be distracting to

- Which kind of diagrams do you use to track progress in a project?

I've tended to return to the burndown chart time after time. "Big visible charts" has some discussion of different charts that can be used to measure various metrics of your project.

- What is the difference between an iteration and an increment?

Basically, an iteration is a unit of work and an increment is a unit of product delivered.

- Can you explain the practice of risk management? How should risks be managed?

I don't know anything about risk management formally, but I prefer to deal with higher risk items first when possible.

- Do you prefer a work breakdown structure or a rolling wave planning?

I have to be honest and say I don't know what you're talking about. Based on the names, my guess would be that "work breakdown structure" analyzes what needs to be done and breaks it into chunks to be delivered in a specific order, whereas rolling wave may be more like do one thing and then another, going with the flow.

Wikipedia shows what I had in mind about WBS, while pmcrunch.com has info on RWP.

After reading about RWP I realized that I had already known about it - it was just buried deep in my memory.

In any case, I would think, like most everyone else, that I'd prefer the work breakdown structure, but it's unrealistic in most projects (though repetitive projects, for instance, could use it very successfully). Therefore, I'll take the rolling wave over WBS please.

- What do you need to be able to determine if a project is on time and within budget?

Just the burndown chart, if it's been created out of truthful data.

- Can you name some differences between DSDM, Prince2 and Scrum?

I'm not at all familiar with Prince2, so I can't talk intelligently about it. DSDM is similar to Scrum in that both stress active communication with and involvement of the customer, as well as iterative and incremental development. I'm not well versed in DSDM, but from what little I've heard, it sounds a bit more prescriptive than Scrum.

I'd suggest reading the Wikipedia articles to get a broad overview of these subjects - they are decent starters.

It would be nice if there were a book that compared and contrasted different software development methodologies, but in the absence of such a book, I guess you have to read one for each.

- How do you agree on scope and time with the customer, when the customer wants too much?

Are they willing to pay for it? If they get too ridiculous, I'd just have to tell them that I can't do what they're asking for and still be able to pay my developers. Hopefully, there would be some convincing that worked before it came to that point, since we don't want to risk losing customers. However, I must admit that I don't have any strategies for this. I'd love to hear them, if you have some.

There are a couple of stories you can tell:

- about 9 women having one baby in just one month. (Fred Brooks)

- about your friend with an interesting first date philosophy (Venkat Subramaniam)

How would you answer these questions about project management?

Posted by Sam on May 21, 2009 at 12:00 AM UTC - 6 hrs

For the last few months, I've been having trouble getting out of "next week" mode.

That's what I call it when I don't know what I'll be working on outside of the next week at any given time. It's not necessarily a bad thing, but when you're working on projects that take longer than a couple of weeks, it doesn't let you keep the end in sight. Instead, you're tunneling through the dirt and hoping you've been digging up instead of down.

I've delivered most projects during this period on schedule, but I did cave in to pressure to over-promise and under-deliver on one occasion. And it sucked.

When I wrote that rewarding heroic development promotes bad behavior, I said reducing the risk and uncertainty of project delivery is the subject of a different story, and the discussion in the comments got me thinking about this. There are many stories worth telling regarding this issue.

The rest of this story is about how I'm intending to get out of my funk using techniques that have worked for me in the past.

(Aside: As I write the words below, it occurs to me we have a chicken/egg problem of which comes first.

Just start somewhere.)

To make decent estimates there are 3 must-haves:

- Historical data as to how much you can complete in a given time frame

- Backlog of items you need to complete in the time frame you're wanting to estimate for

- The ability to break requests into sweet, chunky, chewy, bite-sized morsels of estimable goodness.

Since you haven't been doing this ["ever", "in a while"][rand*2], you don't have historical data. Your backlog

is anything on your table that hasn't been completed yet - so you've got that. Now, you need to break your

backlog apart into small enough bits to estimate accurately. This way, you practice the third item and

in a couple of weeks, you'll have historical data.

About estimating tasks:

Don't worry about estimating in units of time. You're probably not good at it. Most of us aren't, and you haven't

even given it a fair shot with some data to back up your claims. Measure in points or tomatoes. Provide your estimate

in chocolate chips. The unit of measurement doesn't matter at this point, so pick something that

makes you happy. However, you should stay away from units of time at this point in the exercise. You're not

good at that, remember?

So I have some number of tasks that need to be completed. I write each of them down, and decide how many chocolate

chips it's going to take me to finish each one. I count in Fibonacci numbers instead of counting numbers, because

as tasks grow in time and complexity, estimates grow in uncertainty. I try to keep all of my tasks as 1, 2, or 3

chocolate chips. Sometimes I'll get up to a 5.

But if you start venturing into 8 and 13 or more, you're basically saying

IDFK anyway, so you might as well be honest and bring that out into the open. Such tasks are more like

Chewbaccas than chocolate chips, so take some time to think about

how you might break them down as far as possible.

photo by Bonnie Burton found on starwarsblog photostream licensed CC Attribution 2.0 as of 5/21/2009

Now that you know how to estimate tasks:

Before you start on a task -- with a preference to earlier rather than later (hopefully as soon as you know it needs to be done) --

estimate how many points it should take you, then write it down on your list of items to complete. Take note

of how many chocolate chips you finish daily. Write down the number completed and the date.

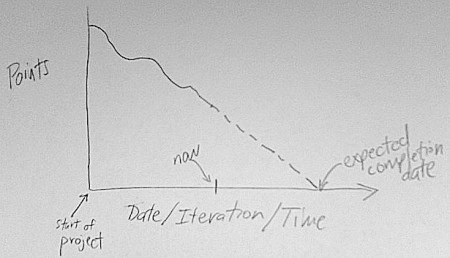

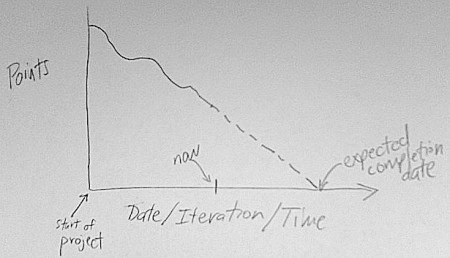

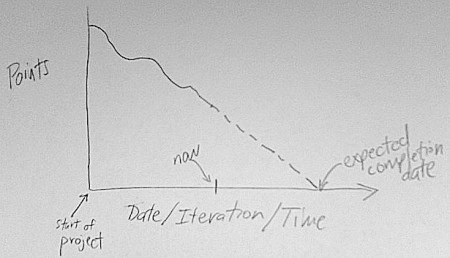

Make a graph over time with the number of chocolate chips you have remaining (or how many you've completed) on the Y-axis and the date on the X-axis. If you use points remaining, it's a Burn Down chart. If you go the other way, it's, not surprisingly, called a Burn Up chart.
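If it helps to see the two charts as data, here is a minimal Python sketch that turns a daily log into both series. The dates, the 20-chip total, and the `burn_series` helper are all made up for illustration, and it assumes the total doesn't change while you work:

```python
from datetime import date


def burn_series(total_points, completed_by_day):
    """Return (day, done_so_far, remaining) rows in date order.

    done_so_far is what a Burn Up chart plots; remaining is the Burn Down line.
    """
    rows = []
    done = 0
    for day in sorted(completed_by_day):
        done += completed_by_day[day]
        rows.append((day, done, total_points - done))
    return rows


# Hypothetical log: chips finished on each date.
log = {
    date(2009, 5, 18): 3,
    date(2009, 5, 19): 2,
    date(2009, 5, 20): 5,
}

for day, done, remaining in burn_series(20, log):
    print(day, "burn up:", done, "burn down:", remaining)
```

With this log the Burn Up values are 3, 5, 10 and the Burn Down values are 17, 15, 10. Plot either column against the date and you have your chart.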

Keep a log of the number of chips you complete per week. The average of the last two or three weeks is a good indication of how many you'll be able to do for the next few weeks, and helps with planning for individuals spanning several projects, or teams on a single project.

You can now reference your chips per week to extrapolate how long it's likely to take you to finish a particular

task or small project.
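The extrapolation is just a division. A rough Python sketch, with invented weekly numbers and hypothetical helper names, assuming you average the last three weeks and round up to whole weeks:

```python
import math


def recent_velocity(chips_per_week, window=3):
    """Average chips completed over the most recent `window` weeks."""
    recent = chips_per_week[-window:]
    return sum(recent) / len(recent)


def weeks_needed(chips_remaining, chips_per_week, window=3):
    """Whole weeks needed to finish the remaining chips at the recent pace."""
    return math.ceil(chips_remaining / recent_velocity(chips_per_week, window))


history = [6, 11, 9, 10]           # chips finished in each of the last four weeks
print(recent_velocity(history))    # 10.0 (only the last three weeks count)
print(weeks_needed(25, history))   # 3
```

Using only the recent weeks keeps an unusually slow first week (the 6 here) from dragging the forecast down forever.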

Further, you'll always want to know how many points you've got in your backlog and how many you need to complete by a given date. If you keep a log of due dates, you can reference it and your points per week when someone asks you when you can have something done. Now, you can say "I can start on the 26th, or you can rearrange the priorities on my current work and I can be done by the end of the day."
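For the "I can start on the 26th" half of that answer, here is one way the date might fall out of the numbers. This is a Python sketch under loud assumptions: the backlog size and velocity are invented, `earliest_start` is a hypothetical helper, and it counts calendar days rather than working days:

```python
import math
from datetime import date, timedelta


def earliest_start(today, backlog_points, points_per_week):
    """Date on which the current backlog should be cleared at the given pace."""
    days_of_work = math.ceil(backlog_points * 7 / points_per_week)
    return today + timedelta(days=days_of_work)


# Hypothetical: 7 points already committed, finishing about 10 points a week.
start = earliest_start(date(2009, 5, 21), backlog_points=7, points_per_week=10)
print(start)  # 2009-05-26
```

If the person asking wants it sooner, the conversation becomes about which of those 7 points can wait, which is exactly the trade-off you want them to be making.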

Any questions? As always, I'm happy to answer them.

The majority of these ideas are scrum-thought and I've used terms from that methodology, so if you want to

go deeper, that would be a good place to look.

What do you do for planning and estimation?

Posted by Sam on Feb 18, 2008 at 06:43 AM UTC - 6 hrs

Last week, hgs asked,

I find it interesting that lots of people write about how to produce clean code,

how to do good design, taking care about language choice, interfaces, etc, but few people

write about the cases where there isn't time... So, I need to know what are the forces that tell you

to use a jolly good bodge?

I suspect we don't hear much about it because these other problems are often caused by that excuse.

And, in the long run, taking on that technical debt will likely cause you to go so slow that that's the

more interesting problem. In other words, by ignoring the need for good code, you are jumping into

a downward spiral where you are giving yourself even less time (or, making it take so long to do anything

that you may as well have less time).

I think the solution is to start under-promising and over-delivering, as opposed to how most of us do it

now: giving lowball estimates because we think that's what they want to hear. But why lie to them?

If you're using iterative and incremental development, then if you've over-promised one iteration, you

are supposed to dial down your estimates for what you can accomplish in subsequent iterations, until

you finally get good at estimating. And estimates should include what it takes to do it right.

That's the party-line answer to the question. In short: it's never OK to write sloppy code, and

you should take precautions against ever putting yourself in a situation where those

viscous forces pull you in that direction.

The party-line answer is the best answer, but it doesn't fully address the question, and I'm not

always interested in party-line answers anyway. The viscosity (when it's easier

to do the wrong thing than the right thing) is the force behind the bodge. I don't like it, but I

recognize that there are going to be times you run into it and can't resist.

In those cases where you've already painted yourself into a corner, what then? That's the interesting

question here. How do you know the best

places to hack crapcode together and ignore those things that may take a little longer in the short run, but

whose value shows up in the long run?

The easy answer is the obvious one: cut corners in the code that is least likely to need to change or

be touched again. That's because (assuming your hack works) if we don't have to look at the code again,

who really cares that it was a nasty hack? The question whose answer is not so easy or

obvious is "what does such a place in the code look like?"

By the definition above, it would be the lower levels of your code. But if you do that, and inject a bug, then

many other parts of your application would be affected. So maybe that's not the right place to do it.

Instead, it would be better to do it in the higher levels, on which very little (if any) other code depends. That way, you limit the effects of it. More importantly, if no other code depends on it, it is easier to change than if other code were highly dependent on it. [1]
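One way to look for those places is to write down which module depends on which and pick out the ones nothing else depends on. A small Python sketch of that idea, with an entirely hypothetical dependency map and helper name:

```python
def nothing_depends_on(dependencies):
    """Return the modules that no other module lists as a dependency.

    `dependencies` maps each module name to the modules it depends on.
    """
    depended_on = {dep for deps in dependencies.values() for dep in deps}
    return sorted(set(dependencies) - depended_on)


# Hypothetical application: two pages on top, shared code underneath.
deps = {
    "report_page": ["billing", "db"],
    "admin_page": ["db"],
    "billing": ["db"],
    "db": [],
}

print(nothing_depends_on(deps))  # ['admin_page', 'report_page']
```

By this measure a bodge in `report_page` can only hurt `report_page`, while one in `db` could break everything above it. It says nothing about how likely the code is to change, which is the other half of the question.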

Maybe the crapcode can be isolated: if a class is already awful, can you derive a new class from it and make any new additions with higher quality? If a class is of high quality and you need to hack something together, can you make a child class and put the hack there? [2]
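As a sketch of the second case, here is what putting the hack in a child class might look like in Python. The class names and the rush fee are invented for illustration. The hack is added as a new method rather than an override, so the child can still stand in for the parent anywhere the parent is used:

```python
class InvoiceCalculator:
    """The clean, well-tested class we don't want to dirty up."""

    def total(self, amounts):
        return sum(amounts)


class RushJobInvoiceCalculator(InvoiceCalculator):
    """Holds the bodge so the parent class stays untouched."""

    def total_with_rush_fee(self, amounts):
        # HACK: hard-coded fee until there's time to model fees properly.
        return self.total(amounts) + 25


print(InvoiceCalculator().total([10, 20]))                       # 30
print(RushJobInvoiceCalculator().total([10, 20]))                # 30, inherited unchanged
print(RushJobInvoiceCalculator().total_with_rush_fee([10, 20]))  # 55
```

When the time comes to pay down the debt, everything that needs rewriting is in one small class with an obvious name.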

Uncle Bob recently discussed when unit and acceptance

testing may safely be done away with. He put those numbers around 10 and a few thousand lines of code,

respectively.

In the end, there is no easy answer that I can find where I would definitively say, "that's the place for a bodging."

But I suspect there are some patterns we can look for, and I tried to identify a couple of those above.

Do you have any candidates you'd like to share?

Notes:

[1] A passing thought for which I have no answers:

The problem with even identifying those places is that by hacking together solutions, you are more likely

to inject defects into the code, which makes it more likely you'll need to touch it again.

[2] I use inheritance here because the new classes should be

able to be used without violating LSP.

However, you may very well be able to make those changes by favoring composition.

If you can, I'd advocate doing so.

Posted by Sam on Dec 12, 2007 at 08:22 AM UTC - 6 hrs

I'm not talking about Just-In-Time compilation. I'm talking about JIT manufacturing.

When you order furniture, it likely gets assembled only after the order was received.

Toyota is famous for doing it with cars.

You can do it yourself with JIT published books.

On top of that, Lands' End offers custom tailored, JIT manufactured clothing.

It's easy to say, "yes, we can publish software in the same manner." Every time we offer a

download, it's done just in time. This post was copied and downloaded (published) at the

moment you requested it.

That's not what I'm talking about either.

A disclaimer: I've yet to read the relevant book (Lean Software Development: An Agile Toolkit) by the Poppendiecks, who brought Lean to software. They may have covered this before.

What my question covers is this: Can we think of an idea that would be repeatable, sell

it to customers to fund the project, and then deliver it when it's done? (It should be sold to

many customers, as opposed to custom software, which is, for the most part, already developed in that manner.)

In essence, can we pre-sell vaporware?

We already pre-sell all types of software - but that software is (presumably) nearing a

releasable state (I've had my doubts about some of it). Can we take it to the next level

and sell something which doesn't yet exist?

If such a thing is possible, there are at least three things you'll need to

be successful (and I bet there are more):

- A solid reputation for excellence in the domain you're selling to, or a salesperson with

such a reputation, and the trust that goes with it.

- A small enough idea such that it can be implemented in a relatively short time-frame. This, I

gather, would be related to the industry in which you're selling the software.

- A strong history of delivering products on time.

What do you think? Is it possible? If so, what other qualities do you need to possess to

be successful? If not, what makes you skeptical?

Last modified on Dec 12, 2007 at 08:23 AM UTC - 6 hrs

Posted by Sam on Nov 26, 2007 at 06:08 AM UTC - 6 hrs

Last week I posted about why software developers should care about process, and

how they can improve themselves by doing so. In the comments, I promised to give a review of

what I'm doing that seems to be working for me. So here they are - the bits and pieces that work for me.

Also included are new things we've decided to try, along with some notes about what I'd like to

attempt in the future.

Preproject Considerations

Most of our business comes through referrals or new projects from existing customers. Out of those, we try only to accept referrals or repeat business from the "good clients," believing their friends will be similarly low maintenance, high value, and most importantly, great to work with.

We have tried the RFP circuit in the past, and recently considered

going at it again. However, after a review of our experiences with it, we felt that unless you are the cause of the RFP

being initiated, you have a subatomically small chance of being selected for the project (we've been on both

ends of that one).

Since it typically takes incredible effort to craft a response, it just seems like a waste of hours

to pursue.

On the other hand, we are considering creating a default template and using minimal

customization to put out for future RFPs, and even then, only considering ones that have a very

detailed scope, to minimize our effort on the proposal even further.

We're also trying to move ourselves into the repeatable solutions space - something that really takes the

cheap manufacturing ability we have in software - copying bits from one piece of hardware storage to another -

and puts it to good use.

Finally, I'm very interested to hear how some of you in the small software business world bring in business.

I know we're technically competitors and all, but really, how can you compete with

this?

The Software Development Life Cycle

I won't bother you by giving a "phase" by phase analysis here. Part of that is because I'm not sure

if we do all the phases, or if we're just so flexible and have such short iterations the phases seem to bleed

together. (Nor do I want to spend the time to figure out which category each thing belongs in.)

Depending on the project, it could be either. Instead, I'll bore you with what we do pretty

much every time:

At the start of a project

At the start of a project, we sit down with the client and take requirements. There's nothing fancy here. I'm the coder and I get involved - we've found that it's a ridiculous waste of time to pass my questions through a mediator and wait two weeks to get an answer. Instead, we take some paper or cards and pen, and dry erase markers for the whiteboard. We talk through what the system should do at a high level, and make notes of it.

We try to list every feature in terms of the users who will perform it and its reason for existence.

If that's unknown, at least we know the feature, even if we don't know who will get to use it or why

it's needed. All of this basically gives us our "use cases,"

without a lot of the formality.

I should also note that we do the formal bit if the need is there, or if the client wants to

work that way. But those meetings can easily get boring, and when no one wants to be there, it's not

an incredibly productive environment. If we're talking about doing the project in Rails or ColdFusion,

it often takes me longer to write a use case than it would to implement

the feature and show it to the client for feedback, so you can see why it might be

more productive to skip the formality in cases that don't require it.

After we get a list of all the features we can think of, I'll get some rough estimates of points

(not hours) of each feature to the client, to give them an idea of the relative costs for each feature.

If there is a feature which is something fairly unrelated to anything we've had experience with, we give

it the maximum score, or change it to an "investigate point cost," which would be the points we'd need

to expend to do some research to get a better estimate of relative effort.

Armed with that knowledge, they can then give me a prioritized list of the features they'd like to see

by next Friday when I ask them to pick X number of points for us to work on in the next week. Then

we'll discuss in more detail those features they've chosen, to get a better idea of exactly what it is

they're asking for.
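As a rough illustration of the "pick X number of points" step, here is a minimal sketch. The function name, the feature names, and the rule of stopping at the first feature that doesn't fit are all assumptions made for the example; in practice the client simply chooses, and we talk it over.

```python
def pick_features(prioritized, budget):
    """Take features from the top of a prioritized list until the next
    one no longer fits in the point budget.

    `prioritized` is a list of (name, points) pairs, highest priority first.
    Stopping at the first feature that does not fit is an assumption made
    here to preserve the client's ordering; it is not a rule from the post.
    """
    chosen = []
    remaining = budget
    for name, points in prioritized:
        if points > remaining:
            break  # next-highest priority does not fit; stop here
        chosen.append(name)
        remaining -= points
    return chosen, remaining


# Hypothetical feature list with relative point costs (not hours).
features = [("login", 5), ("reports", 8), ("export", 3)]
print(pick_features(features, 13))
```

Whether to skip an oversized feature and keep going down the list is a judgment call for the client; the sketch just makes the budget arithmetic visible.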

We repeat that each iteration, adjusting the X number of points the client

gets to choose based on what was actually accomplished the previous iteration - if there was spare time,

they get a few more points. If we didn't finish, those go on the backlog and the client has fewer points

to spend. Normally, we don't have the need for face to face meetings after the initial one, but I prefer

to have them if we can. We're just not religious about it.
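The adjustment of X from one iteration to the next can be sketched roughly like this. It is only one way to read the rule above: the names are hypothetical, and I'm assuming that "a few more points" is whatever we judge the spare time to have been worth, and that an unfinished iteration drops the next budget to what was actually completed.

```python
def next_budget(budget, completed, spare_points=0):
    """Return (next_budget, back_to_backlog) for the coming iteration.

    Assumptions for illustration only:
    - if everything was finished, the client gets `spare_points` extra;
    - otherwise the next budget is what was actually completed, and the
      unfinished points go back on the backlog.
    """
    if completed >= budget:
        return budget + spare_points, 0
    unfinished = budget - completed
    return completed, unfinished


print(next_budget(20, 20, spare_points=3))  # finished with time to spare
print(next_budget(20, 15))                  # fell short by 5 points
```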

Whiteboards at this meeting are particularly useful, as most ideas can be illustrated quite quickly, have

their picture taken, and be erased when no longer needed. Plus, it lets everyone get involved when we start

prioritizing. Notecards are also nice as they swap places with each other with incredible ease.

Within each iteration, we start working immediately. Most of the time, we have one week iterations, unless there are a couple of projects going on -

then we'll go on two week iterations, alternating between clients. If the project is relatively stable,

we might even do daily releases. On top of that,

we'll interface with the client daily if they are available that frequently, and if there is something to show.

If the project size warrants it, we (or I) track our progress in consuming points on a burndown chart.

This would typically be for anything a month or longer. If you'll be mostly done with a project in a week,

I don't see the point in coming up with one of these. You can set up a spreadsheet to do all the calculations

and graphing for you, and in doing so you can get a good idea of when the project will actually

be finished, not just some random date you pull out of the air.
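The spreadsheet calculation is simple enough to sketch in a few lines. This is a minimal version under stated assumptions: the function names and the sample numbers are made up, and the projection uses the plain average of points completed per iteration, which is only one of several reasonable ways to estimate velocity.

```python
import math


def burndown(total_points, completed_per_iteration):
    """Points remaining at the start and after each finished iteration."""
    remaining = total_points
    series = [remaining]
    for done in completed_per_iteration:
        remaining -= done
        series.append(remaining)
    return series


def iterations_remaining(remaining_points, completed_per_iteration):
    """Project iterations left from the average observed velocity."""
    if not completed_per_iteration:
        raise ValueError("need at least one finished iteration")
    velocity = sum(completed_per_iteration) / len(completed_per_iteration)
    if velocity <= 0:
        raise ValueError("no progress yet; cannot project a finish")
    return math.ceil(remaining_points / velocity)


# Hypothetical project: 100 points total, three iterations finished.
history = [10, 14, 12]
series = burndown(100, history)
print(series)
print(iterations_remaining(series[-1], history))
```

Plotting the series against the iteration number gives the burndown chart; the projected count times the iteration length gives a finish date grounded in what has actually been delivered so far.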

Another thing I try to be adamant about is insisting the client start using the product as soon as it

provides some value. This is better for everyone involved. The client can realize ROI

sooner and feedback is richer. Without it, the code is not flexed as much. Nor do you get to see what

parts work to ease the workload and which go against it as early in the product's life, and that makes changes more difficult.

For us, the typical client has been willing

to do this, and projects seem to devolve into disaster more readily when they don't.

Finally, every morning we have our daily stand-up meeting. Our company is small enough so that we can

talk about company-wide stuff, not just individual projects. Each attendee answers three questions:

- What did you do yesterday?

- What are you going to do today?

- What is holding you back?

The meeting is a time-conscious way (15 minutes - you stand so you don't get comfortable) to keep

us communicating. Just as importantly, it keeps us accountable to each other, focused on setting

goals and getting things done, and removing obstacles that get in our way.

On the code side of things, I try to have unit tests and integration tests for mostly everything.

I don't have automated tests for things like games and user interfaces. I haven't seen much detriment

from doing it this way, and the tradeoff for learning how to do it doesn't seem worth it at the moment.

I would like to learn how to do it properly and make a more informed decision though. That

will likely come when time is not so rare for me. Perhaps when I'm finished with school

I'll spend that free time learning the strategies for testing such elements.

Luckily, when I'm working on a ColdFusion project, cfrails is pretty well tested so I get to skip a lot

of tests I might otherwise need to write.

By the same token, I don't normally unit test one-off scripts, unless there are obvious test cases I can

meet or before doing a final version that would actually change something.

I don't know how to do it in CF, but when I've used continuous integration tools for Java projects it has been

helpful. If you have good tests, the CI server will

report when someone checks in code that breaks the tests. This means bad code gets checked in less often.

If you don't have the tests to back it up, at least you'll feel comfortable knowing the project builds

successfully.

For maintenance, we normally don't worry about using a project management tool to track issues.

Bugs are fixed as they are reported - show stoppers immediately, less important within the day, and things deemed

slight annoyances might take a couple of days. I'd like to formalize our response into an actual policy, though.

Similarly, new requests are typically handled within a couple of days if they are small and I'm not

too busy - otherwise I'll give an estimate as to when I can have it done.

With bugs in particular, they are so rare and few in number

that I could probably track them in my head. Nevertheless, I mark an email with my "Action Required" tag,

and try my best to keep that folder very small. Right now I've overcommitted myself and the folder isn't

empty, but there was a time recently that it remained empty on most nights.

In any event, I normally only use project management tools for very large projects or those I inherited

for some reason or another.

Summary

If you're a practitioner, you can tell the ideas above are heavily influenced by (when not directly part of)

Scrum and Extreme Programming. I wouldn't call what we're doing by either of their names. If you're not familiar

with the ideas and they interest you, now you know where to look.

Where would we like to go from here?

One thing that sticks out immediately is client-driven automated testing with Selenium or FIT.

I'd also like to work for several months on a team that does it all and does it right,

mostly to learn how I might better apply things I've learned, heard of, or have yet to be exposed to.

What else? That will have to be the subject of another post, as this one's turned into a book.

Thoughts, questions, comments, and criticisms are always welcome below.

Last modified on Nov 26, 2007 at 06:16 AM UTC - 6 hrs

Posted by Sam on Nov 23, 2007 at 11:11 AM UTC - 6 hrs

In this week's advice from MJWTI,

"The Way That You Do It," Chad Fowler talks about process and methodology in software development. One quote

I liked a lot was:

It's much easier to find someone who can make software work than it is to find someone who can make the

making of software work.

Therefore, it would behoove us to learn a bit about the process of software development.

I never used to have any sort of process. We might do a little requirements gathering, then code everything

up, and show it to the customer a couple of months later. They'd complain about some things and offer

more suggestions, then whoever talked to them would try to translate that to me, probably a couple of

weeks after they first heard it. I'd implement my understanding of the new requirements or fixes, then

we'd show it to the customer and repeat.

It was roughly iterative and incremental, but highly dysfunctional.

I can't recall if it was before or after reading this advice, but it was around the time nevertheless that I

started reading and asking questions on several of the agile development mailing lists.

Doing that has given me a much better understanding of how to deliver higher quality, working software on a timely

basis. We took a little bit from various methodologies and now have a better idea of when software will

be done, and we interface with the customer quite a bit more - and that communication is richer than ever

now that I involve myself with them (most of the time, anyway). We're rolling out more things as time goes

along and as I learn them.

I'd suggest doing the same, or even picking up the canonical books on different methodologies and reading

through several of them. I haven't done the latter quite yet, but it's definitely on my list of things to do.

In particular, I want to expose myself to some non-Agile methods, since most of my knowledge comes from

the Agile camp.

Without exposing yourself to these ideas, it would be hard to learn something useful from them.

And you don't have to succumb to the dogma - Chad mentions (and I agree) that it would be sufficient to

take a pragmatic approach - that "the best process to follow is the one that makes your team the most

productive and results in the best products." But it is unlikely you will have a "revelationary epiphany"

about how to mix and match the pieces that fit your team. You've got to try them out, "and continuously refine

them based on real experience."

I don't think it would be a bad idea to hire a coach either (if you can afford one - or maybe you

have a friend you can go to for help?), so you've got someone to tell you if you're doing

it the wrong way. If you have a successful experiment, you probably did it the right way. But you won't

likely know if you could get more out of it. The same is said of doing it the wrong way - you may be

discarding an idea that could work wonders for you, if only you'd done it how it was meant to be.

In the end, I like a bit of advice both Venkat and Ron Jeffries

have given: You need to learn it by doing it how it was meant to be done. It's hard to pick and choose different practices without

having tried them. To quote Ron,

My advice is to do it by the book, get good at the practices, then do as you will. Many people want to skip to step three. How do they know?

Do you have any methodological horror stories or success stories? I'd love to hear them!

Update: Did I really spell "dysfunctional" as "disfunctional" ? Yup. So I fixed that and another spelling change.

Last modified on Nov 24, 2007 at 06:17 AM UTC - 6 hrs

Posted by Sam on Jul 30, 2007 at 02:43 PM UTC - 6 hrs

Mingle is a project management tool that is supposed to be the first commercial product running on JRuby on Rails, and ThoughtWorks just announced its release.

I first heard of it back at NFJS about a month ago, and went online to get a preview copy. I had hoped to review it on the blog, but that never got done. In any case, you can now get a copy and try it for yourself. It's probably pretty good. I mean, it is from ThoughtWorks.

Posted by Sam on Jul 30, 2007 at 01:28 PM UTC - 6 hrs

A while ago Ted Neward was reading The 33 Strategies of War and decided to relate those strategies of war to software development.

This weekend he put up the first in the series of 33: Declare War on Your Enemies (The Polarity Strategy).

In any case, I like the idea. Having had some education in politics and computer science, this appealed to me even more than it might have, but I think I'd recommend reading it anyway.

My favorite quote?

We will not ship code that fails to pass any unit test... Well, then, we'll not write any unit tests, and we'll have met that goal!

What do you think of viewing software projects as conflict?

Posted by Sam on Jun 25, 2007 at 05:36 PM UTC - 6 hrs

A phrase that Venkat likes to use sums up my view on software religions: "There are no best practices. Only better practices." That's a powerful thought on its own, but if you want to make it a little more clear, I might say "There are no best practices. Only better practices in particular contexts." This concept tinges my everyday thinking.

As I mentioned before, I'm blind to rules that "always apply" in software development. This post about fundamentalism in software methodology got me thinking about a post I had meant to write up a while ago, which basically turned into this one.

The quote that got me was right at the beginning: someone "suggested that he'd like to ban the use of the term, 'Best Practices,' given that it's become something of a convenient excuse that IT professionals use to excuse every insane practice under the sun, regardless of its logical suitability to the business or environment." I would have added, "or its illogical absurdity given the business or environment."

Certainly, it is nice to have shortcuts that allow you to easily make decisions without thinking too long about them. Likewise, it's good to know the different options available to you.

"Best practices" is too much of a shortcut. If something is a "best practice," it implies there is no better practice available. All investigation for better solutions and thought about room for improvement ceases to exist. If something is a "best practice" and it is getting in your way, you have to follow it. It is the best, after all, and you wouldn't want to implement a sub-par solution when a better one exists, would you?

On the other hand, "better practices in specific contexts" gives you shortcuts, but it still allows you to think about something better. If I'm having trouble implementing what I thought was the preferred solution, perhaps it is time to investigate another solution. Am I really in the same context as the "practice" was intended for? It keeps things in perspective, without giving you the opportunity to be lazy about your decision-making process.

So my best practice for making decisions is recognition that there is no best practice. That's sort of like how I know more than you because I recognize I know nothing. So try hard not to fall into the trap that is "best practice." Avoid idolatry and sacred cows in software development. Aside from programming an application for a religious domain, there's just not much use for worship in programming. There's certainly no room for worshiping particular ways of doing software.

What do you think?

Posted by Sam on Jun 25, 2007 at 05:35 PM UTC - 6 hrs

There is a seemingly never-ending debate (or perhaps unconnected conversation and misunderstandings) on whether or not the software profession is science or art, or specifically whether "doing software" is in fact an engineering discipline.

Being the pragmatist (not a flip-flopper!) I aspire to be, and avoiding hard-and-fast deterministic rules on what exactly is software development, I have to say: it's both. There is a science element, and there is an artistic element. I don't see it as a zero-sum game, and it's certainly not worthy of idolatry. (Is anything?)

That said, I have to admit that lately I've started to give more weight to the "artistic" side than I previously had. There are just so many "it depends" answers to software development, that it seems like an art. But how many of the "it depends"-es are really subjective? Or, is art even subjective in the first place? Then today I remembered reading something long ago in a land far far away: that the prefix every engineer uses when answering a question is "it depends."

I think almost certainly there is much more science and engineering involved in what we do than art. But, I think there is a sizable artistic element as well. Otherwise we wouldn't use terms like "hacked a solution" (as if hacking through a jungle, not so much the positive connotation of hackers hacking), or "elegant code" or "elegant design." Much of design, while rooted in principles (hopefully, anyway), can be viewed as artistic as well.

The land far away and the time long ago involved me as an Electrical Engineer in training. I dropped out of that after a couple of years, so I don't have the full background, but most of what we were learning were the laws governing electrical circuits, physics, magnetism, and so on (I guess it's really all physics when you get right down to it). Something like that would lead you to believe that if we call this software engineering, we should have similar laws. It's not clear on the surface that we do.

But Steve McConnell posted a good rundown the other day about why the phrase "Writing and maintaining software are not engineering activities. So it's not clear why we call software development software engineering" misses the point completely.

In particular, we can treat software development as engineering - we've been doing so for quite some time. Clearly, "engineering" has won the battle. Instead, Steve lists many different questions that may be valuable to answer, and also describes many of the ways in which software development does parallel engineering.

So what do you think? In what ways is software development like engineering? In what ways is it like art?

Posted by Sam on Jun 12, 2007 at 09:33 AM UTC - 6 hrs

Many of us know the value of deferring commitment until the last responsible moment (in the past, I've always said "possible," but "responsible" conveys the meaning much more clearly, I think). In fact, it is the underlying principle of YAGNI, something more of us are familiar with.

The argument goes something like: If you do not wait to make decisions until they need to be made, you are making them without all of the information that will be available to you at the time it does need to be made. Now, you may get lucky and be right, but why not just wait until the decision has to be made so you can be sure you have all the information you can get?

I find it to be very sound advice.

I missed this the other day on InfoQ, but they have an article by Chris Matts and Olav Maassen entitled " 'Real Options' Underlie Agile Practices" that explains the thinking behind this in detail, relating it to Financial Option Theory and some psychology. It's a good article explaining how "Real Options" forms the basis of A/agile thinking, and had some ideas I hadn't heard before about keeping your best developers free as they represent more "options" and you shouldn't commit them too early. Also, they can create options by mentoring less experienced developers (through pairing perhaps). There's a lot more than this simple summary, and I consider it worth a read.

Any thoughts?

Posted by Sam on Jun 11, 2007 at 09:52 AM UTC - 6 hrs

Want to get a report of a certain session? I'll be attending No Fluff Just Stuff in Austin, Texas at the end of June. So, look at all that's available, and let me know what you'd like to see.

I haven't yet decided my schedule, and it's going to be tough to do so. I'm sure to attend some Groovy and JRuby sessions, but I don't know which ones. In any case, I want to try to leave at least a couple of sessions open for requests, so feel free to let me know in the comments what you'd like to see. (No guarantees though!). Here's what I'm toying with so far (apologies in advance for not linking to the talks or the speakers' blogs, there are far too many of them =)):

First set of sessions: No clue. Leaning toward session 1, Groovy: Greasing the Wheels of Java by Scott Davis.

Second set: 7 (Groovy and Java: The Integration Story by Scott Davis), 8 (Java 6 Features, what's in it for you? by Venkat Subramaniam), 9 (Power Regular Expressions in Java by Neal Ford), or 11 (Agile Release Estimating, Planning and Tracking by Pete Behrens). But, no idea really.

Third set: 13 (Real World Grails by Scott Davis), 15 (10 Ways to Improve Your Code by Neal Ford), or 17 (Agile Pattern: The Product Increment by Pete Behrens). I'm also considering doing the JavaScript Exposed: There's a Real Programming Language in There! talks in the 2nd and 3rd sets by Glenn Vanderburg.

Fourth set: Almost certainly I'll be attending session 20, Drooling with Groovy and Rules by Venkat Subramaniam, which will focus on declarative rules-based programming in Groovy, although Debugging and Testing the Web Tier by Neal Ford (session 22) and Java Performance Myths by Brian Goetz (session 24) are also of interest to me.

Fifth set: Again, almost certainly I'll go to Session 27, Building DSLs in Static and Dynamic Languages by Neal Ford.

Sixth set: No clue - I'm interested in all of them.

Seventh set: Session 37, Advanced View Techniques With Grails by Jeff Brown and Session 39, Advanced Hibernate by Scott Leberknight, and Session 42, Mocking Web Services by Scott Davis all stick out at me.

Eighth set: Session 47, Pragmatic Extreme Programming by Neal Ford, Session 46, What's New in Java 6 by Jason Hunter, Session 45, RAD JSF with Seam, Facelets, and Ajax4jsf, Part One by David Geary, or Session 44, Enterprise Applications with Spring: Part 1 by Ramnivas Laddad all seem nice.

Ninth set: Session 50, Enterprise Applications with Spring: Part 2 by Ramnivas Laddad or Session 51, RAD JSF with Seam, Facelets, and Ajax4jsf, Part Two by David Geary are appealing, which probably means the eighth set will be the part 1 of either of these talks.

Tenth set: Session 59, Productive Programmer: Acceleration, Focus, and Indirection by Neal Ford is looking to be my choice, though the sessions on Reflection, Spring/Hibernate Integration Patterns, Idioms, and Pitfalls, and NetKernel (which "turns the wisdom" of "XML is like lye. It is very useful, but humans shouldn't touch it," "on its head") all interest me.

Final set: Most probably I'll go to Session #65: Productive Programmer: Automation and Canonicality by Neal Ford.

As you can see, there's tons to choose from - and that's just my narrowed down version. So much so, I wonder how many people will leave the symposium more disappointed about what they missed than satisfied with what they saw =).

Anyway, let me know what you'd like to see. Even if it's not something from the list I made, I'll consider it, especially if there seems to be enough interest in it.

Last modified on Jun 11, 2007 at 09:58 AM UTC - 6 hrs

Posted by Sam on May 30, 2007 at 08:14 AM UTC - 6 hrs

Kenji HIRANABE (whose signature reads "Japanese translator of 'XP Installed', 'Lean Software Development' and 'Agile Project Management'") just posted a video (with English subtitles) of himself, Matz (creator of Ruby), and Kakutani-san (who translated Bruce Tate's "From Java To Ruby" into Japanese) to his blog. According to Matz (if I heard correctly in the video), Kenji's a "language otaku," so his blog may be worth checking out for all you other language otakus (though, from my brief look at it, it seems focused between his company's product development and more general programming issues).

In any case it's supposed to be a six part series where the "goal is to show that 'Business-Process-Framework-Language' can be instanciated as 'Web2.0-Agile-Rails-Ruby' in a very synergetic manner, but each of the three may have other goals."

The first part discusses the history of Java, Ruby, and Agile. I found it interesting and am looking forward to viewing the upcoming parts, so I thought I'd link it here.

Posted by Sam on Mar 29, 2007 at 09:07 AM UTC - 6 hrs

InfoQ has released yet another minibook of interest to me: Patterns of Agile Practice Adoption: The Technical Cluster.

The book discusses questions for the benefit of people who are trying to adopt Agile. In particular, they list "'Where do I start?', 'What specific practices should I adopt?', 'How can I adopt incrementally?' and 'Where can I expect pitfalls?'" as some of the main ones people have.

Browsing the Table of Contents, it looks like the patterns they discuss are many of those you'd expect that people with good development practices are familiar with: automated unit and functional testing, continuous integration, simple design, and collective code ownership. I haven't yet taken the time to read it, but I plan to when I've got some free time. In any case, it looks like at least it would be a good introduction to these things for those who are interested.

Last modified on Mar 29, 2007 at 09:09 AM UTC - 6 hrs

Posted by Sam on Feb 09, 2007 at 08:54 AM UTC - 6 hrs

I think that as developers, we too often ignore business objectives and the driving forces behind the projects on which we work. Because I'd like to know more about how to think and analyze in those terms, I decided to take a course about Management Information Systems this semester in grad school. One of the papers we read particularly stuck with me, so I thought I'd share the part that did: When we undertake a risky project (aren't they all?), we should consider what competitive advantage it will give us, and whether that advantage is sustainable.

To measure sustainability, Blake Ives (from University of Houston) and Gabriel Piccoli (from Cornell) identify four barriers to erosion of the advantage (this is within a framework they present in the paper, which is worth reading). The barriers are driven by "response-lag drivers," which the authors define as "characteristics of the firm, its competitors, the technology, and the value system in which the firm is embedded that contribute to raise and strengthen barriers to erosion." In any case, on to the four things we should consider:

- IT Resources Barrier: This barrier is given by IT assets and capabilities. How flexible is your IT infrastructure? Better than your competitors? How good are your technical people's skills, including management? How good is the relationship between business users and IS developers?

- Complementary Resources Barrier: They use the example of Harrah's using its "national network of casinos to capture drive-in traffic and foster cross-selling" between locations as a perfect example. They also note that these complementary resources need not be considered assets, and could even be liabilities, which, when teamed with the right project could turn into assets.

- IT project barrier: This includes the characteristics of the new technology - how visible, complex, and unique it is. Further, it adds the implementation's complexity and process change. Can your competitors readily see your new technology? How complex is it to build? Is it just off-the-shelf software that a competitor could buy as well, or is it proprietary? How complex is it to implement (is it as simple as Word, or is it a complete ERP system), and how much will your daily business processes need to evolve in the implementation of change?

- Preemption Barrier: Whereas for the most part we can control the first three barriers within our own organization, or more importantly, a competitor theirs, this is less easy to control, but should be easy enough to predict (I don't think they say that, but based on the description, I think it would be). The idea here "focuses on the question of whether, even after successful imitation has occurred, the leader’s position of competitive advantage can be threatened." What are the switching costs for users of your application? Are they high enough to prevent them from leaving if a competitor was able to imitate your system? It also includes the structure of the value system: do they need another provider of this service (relationship exclusivity)? The authors use the example that you typically have only one mutual fund investment provider. Finally, is the link you are serving with the project in the value chain concentrated enough that you can capture most of the market and "lock-out" your competitors?

I couldn't find the paper online (for free), but if you are interested, you can find it at MIS Quarterly.

Last modified on Feb 09, 2007 at 09:00 AM UTC - 6 hrs

Posted by Sam on Feb 08, 2007 at 10:19 AM UTC - 6 hrs

Agile Houston is having their first meeting tonight. I had wanted to make it (and still will if I find time), but I've got a homework deadline tonight. I just wanted to help spread the word. If you are in Houston and aren't on their email list, you can find it here (for general discussion) or here (for announcements only). You can get details on the event (which is being held @ 6:30 PM at Ziggy's Health Grill) on their website.

For those of you far outside of Houston (Texas, USA), I apologize for boring you with useless information. =) It has been my specialty lately.

Last modified on Feb 08, 2007 at 10:20 AM UTC - 6 hrs

Posted by Sam on Feb 07, 2007 at 11:13 AM UTC - 6 hrs

If you are using version control, what is your setup like, and why did you choose to do it that way?

In Java development, I've got a repository, and I checkout the code to my desktop, check it back in, etc. So, there is no version control on the production server, though. Of course, there is no point (that I see) to have it on the production server, since you don't need many of your source files, but only the compiled classes (I'm assuming web development here).

But in CF, you'll be using all your source files as the actual files to be run, so you might very well set up source control on the production server, and have that be your main code base (say, checking it out to your development server and editing it from there, or to your desktop). Or, do you simply run the version control on your development server, and FTP any changes to production?

There are other ways, to be sure, so if you're using version control on your ColdFusion projects, I'd like to know the different ways you have it set up, and why you chose to do it that way. Any help is appreciated!

Last modified on Feb 07, 2007 at 11:13 AM UTC - 6 hrs

Posted by Sam on Dec 12, 2006 at 11:25 AM UTC - 6 hrs

I finally got around to watching Ron Jeffries' interview at InfoQ. Most of this is not groundbreaking new material for programmers/managers/stakeholders/vampirebats who ship "running tested features" on a short iterative basis. But, he said two things that really hit home for me:

If we fall behind on our design, what happens is: our velocity, instead of going up, will begin to tail off and ultimately crash. Almost every software developer at one time or another has worked on a program where he wants to say : "I can't put another feature into this; this program's design is so horrible that I can't add any more features to it, it's incredibly difficult."

and

It's a choice: now, I don't do everything I ought to do, or even everything that I'm quite confident that I have to do, and I don't think anyone does. But the more skill we could bring to this project, and the more we learn how to put those skills together with other people's skills, I think that enables us to come around to do what we have to do.

How true! It's worth the 23 or so minutes it takes to watch it. Anyway, you can find the interview here.

Posted by Sam on Oct 10, 2006 at 09:58 AM UTC - 6 hrs

Susan K. Land, IEEE 2nd Vice President and key contributor on the America's Army project, visited the University of Houston yesterday and gave a talk about how the AA project went from complete chaos to having a major successful release in seven months.

In a nutshell, they went from zero process (CMM Level One) to a repeatable success, CMM Level Two. This was especially impressive because the game is being developed for both public and government use, and by several different development centers around the US (I remember counting more than 10, I think). She mentioned that at one point it was so bad that when one of the companies who hosted the source repository wasn't being funded, they shut off access to it. I wanted to ask why they did business with such a company, but I thought it prudent not to seem like a jerk. (We have a government client and they just renewed their contract about four months too late, but we didn't shut down their service. We just know they work slow.)

All in all this release took seven months.

I was interested in knowing how the developers and managers responded to going from no structure, to one complete with requirements gathering, detailed analysis, coding and testing (all in separate phases, from how she described it). She said the managers were wary at first, but if the project was a success, they would be happy (it was a success). Regarding the developers, she said they actually loved it - and I can certainly see how that was the case, having worked ad-hoc before. However, I'm not a big fan of how they went about the process. She said they spent about one-and-a-half months getting the requirements down, 2.5 on detailed design, 1.5 in coding, and the rest in testing. I don't know if she meant it to sound like waterfall (she said they were more in a spiral, but it didn't sound like it), but I wondered what the "coders" were doing in the rest of the time. Were they designing?

I meant to ask if she thought it may have reduced some risk by designing some, then coding some, and so forth, but time was running low and I didn't want to be offensive. In the end, the project was a success, and if the new process got them there (which, it certainly sounds like it did), then you certainly have to tip your hat.

So hats off to America's Army!

Last modified on Oct 10, 2006 at 10:00 AM UTC - 6 hrs
